
Disparate Impact Analysis

Disparate impact analysis (DIA), also referred to as adverse impact analysis, is a technique for evaluating fairness. It is used to detect when members of a protected or minority group (e.g. defined by gender or race) receive unfavorable decisions more often than others. In lending, for example, the decision is whether an applicant is granted or denied a loan. To conduct a DIA, many different statistics are calculated from confusion matrices. These metrics help us understand the model's overall performance and how it behaves when predicting:

- Bad correctly

- Good correctly

- Bad incorrectly

- Good incorrectly
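The four outcomes listed above correspond to the cells of a confusion matrix. The sketch below is a minimal illustration, assuming labels are encoded as 1 for bad (default) and 0 for good, with bad treated as the positive class: bad predicted correctly is a true positive (TP), good predicted correctly a true negative (TN), bad predicted incorrectly a false positive (FP), and good predicted incorrectly a false negative (FN). The function name `confusion_counts` is hypothetical.

```python
from collections import Counter


def confusion_counts(actual, predicted, positive=1):
    """Count confusion-matrix outcomes, treating `positive` (assumed: 1 = bad/default) as the positive class."""
    counts = Counter()
    for y, y_hat in zip(actual, predicted):
        if y_hat == positive:
            # Predicted bad: correct if actually bad (TP), otherwise a false alarm (FP).
            counts["tp" if y == positive else "fp"] += 1
        else:
            # Predicted good: correct if actually good (TN), otherwise a missed bad (FN).
            counts["tn" if y != positive else "fn"] += 1
    # Counter returns 0 for outcomes that never occurred.
    return {key: counts[key] for key in ("tp", "fp", "tn", "fn")}


# Toy labels: 1 = bad (default), 0 = good.
actual = [1, 0, 1, 0, 0, 1]
predicted = [1, 0, 0, 1, 0, 1]
print(confusion_counts(actual, predicted))  # {'tp': 2, 'fp': 1, 'tn': 2, 'fn': 1}
```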

To calculate confusion matrices, we need to translate the model's predictions into decisions. To make a decision from a model-generated predicted probability, a numeric cutoff must be specified that determines whether an applicant is predicted to default or not. Cutoffs play a crucial role in DIA, since they affect the underlying statistics used to calculate disparity.
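As a minimal sketch of this step, the hypothetical helper below turns predicted default probabilities into decisions, assuming an applicant is labeled 1 (predicted default) when the probability is at or above the cutoff. Changing the cutoff changes the decisions, and therefore every count in the resulting confusion matrix.

```python
def to_decisions(probabilities, cutoff):
    """Return 1 (predicted default) when the probability is at or above the cutoff, else 0."""
    return [1 if p >= cutoff else 0 for p in probabilities]


probs = [0.05, 0.42, 0.61, 0.30, 0.88]
print(to_decisions(probs, cutoff=0.4))  # [0, 1, 1, 0, 1]
print(to_decisions(probs, cutoff=0.7))  # [0, 0, 0, 0, 1]
```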

There are many accepted ways to set the cutoff, but when working with imbalanced data, which is very common in the finance industry, the cutoff that maximizes the F1 score is sometimes considered more robust, since the F1 score balances sensitivity (recall) and precision.
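One way to find the F1-maximizing cutoff is to try each distinct predicted probability as a candidate and keep the one with the highest F1 score, using the identity F1 = 2TP / (2TP + FP + FN). This is a sketch with hypothetical function names and toy data, not the platform's implementation; it assumes 1 marks a bad (default) outcome and that an applicant is predicted bad when the probability is at or above the cutoff.

```python
def f1_at_cutoff(actual, probabilities, cutoff):
    """F1 score of the decisions obtained by applying `cutoff` to the probabilities."""
    tp = fp = fn = 0
    for y, p in zip(actual, probabilities):
        predicted_bad = p >= cutoff
        if predicted_bad and y == 1:
            tp += 1
        elif predicted_bad and y == 0:
            fp += 1
        elif not predicted_bad and y == 1:
            fn += 1
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


def max_f1_cutoff(actual, probabilities):
    """Try every distinct probability as a cutoff and return (best_cutoff, best_f1)."""
    candidates = sorted(set(probabilities))
    best = max(candidates, key=lambda c: f1_at_cutoff(actual, probabilities, c))
    return best, f1_at_cutoff(actual, probabilities, best)


# Toy, imbalanced data: 3 bad (1) and 5 good (0) applicants.
actual = [0, 0, 1, 0, 1, 1, 0, 0]
probs = [0.10, 0.20, 0.35, 0.40, 0.55, 0.80, 0.15, 0.60]
cutoff, best_f1 = max_f1_cutoff(actual, probs)
print(cutoff, best_f1)  # 0.35 0.75
```

Scanning only the observed probabilities is enough for this purpose, because the decisions (and hence F1) can only change when the cutoff crosses one of them.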

To calculate disparity, we compare the confusion matrix of each group (level) of a variable with that of a user-defined reference level, using user-defined thresholds. In this example, the cutoff is the one that maximizes the F1 score, and the thresholds are set according to the four-fifths rule.

In other words, metrics that are more than 20% lower or higher than the reference level's metric are flagged as disparate. The table below lists all the statistics that are calculated from a confusion matrix.
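The comparison against the reference level can be sketched as computing, for each group, the ratio of its metric to the reference level's metric and flagging ratios outside the thresholds. This sketch assumes the metric has already been computed per group (the accuracy values below are made up), reads "20% lower or higher" as a ratio below 0.8 or above 1.2, and keeps the upper bound as a parameter because taking the reciprocal of 4/5 would give 1.25 instead. The function name `disparity_flags` is hypothetical.

```python
def disparity_flags(group_metrics, reference, low=0.8, high=1.2):
    """Return {group: (ratio_to_reference, is_disparate)} for one metric.

    A group is flagged when its metric divided by the reference level's
    metric falls below `low` or above `high`.
    """
    ref_value = group_metrics[reference]
    if ref_value == 0:
        raise ValueError("reference metric is zero; ratios are undefined")
    result = {}
    for group, value in group_metrics.items():
        ratio = value / ref_value
        result[group] = (round(ratio, 3), ratio < low or ratio > high)
    return result


# Hypothetical accuracy per level of a gender variable, with "male" as the reference level.
accuracy = {"male": 0.80, "female": 0.60, "other": 0.78}
print(disparity_flags(accuracy, reference="male"))
```

Here "female" has a ratio of 0.75, below the 0.8 threshold, so it would be flagged; the same function can be applied to each statistic in turn.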

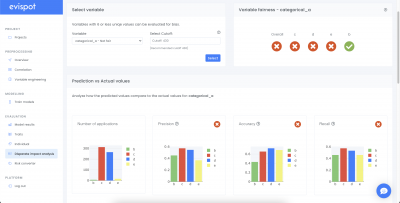
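Most statistics of this kind are simple ratios of the four confusion-matrix counts. The sketch below computes a set of common ones using their standard definitions, with bad (default) as the positive class; the exact list and naming in the platform's table may differ, and the function name `confusion_metrics` is hypothetical. Ratios with a zero denominator are returned as NaN rather than raising an error.

```python
def confusion_metrics(tp, fp, tn, fn):
    """Common statistics derived from confusion-matrix counts (positive class = bad)."""

    def safe_div(numerator, denominator):
        # Undefined ratios (e.g. precision when nothing is predicted bad) become NaN.
        return numerator / denominator if denominator else float("nan")

    total = tp + fp + tn + fn
    return {
        "accuracy": safe_div(tp + tn, total),
        "prevalence": safe_div(tp + fn, total),
        "precision": safe_div(tp, tp + fp),  # positive predictive value
        "negative_predictive_value": safe_div(tn, tn + fn),
        "true_positive_rate": safe_div(tp, tp + fn),  # sensitivity / recall
        "true_negative_rate": safe_div(tn, tn + fp),  # specificity
        "false_positive_rate": safe_div(fp, fp + tn),
        "false_negative_rate": safe_div(fn, fn + tp),
        "f1": safe_div(2 * tp, 2 * tp + fp + fn),
    }


metrics = confusion_metrics(tp=40, fp=10, tn=130, fn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```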

In the Evispot AI platform, a user can conduct a disparate impact analysis by navigating to the disparate impact tab in the evaluation view. Users can switch between variables and choose a cutoff other than the default (the cutoff that maximizes the F1 score). Users are encouraged to consult the explanation dashboard to understand and augment the DIA results. Beyond its established use as a fairness tool, disparate impact analysis can also support broader model debugging. For example, users can analyze the supplied confusion matrices and metrics for important non-demographic variables.


© Evispot 2022 All rights reserved.